Securing AI Innovation: Enterprise Strategies for LLM and Generative AI Security

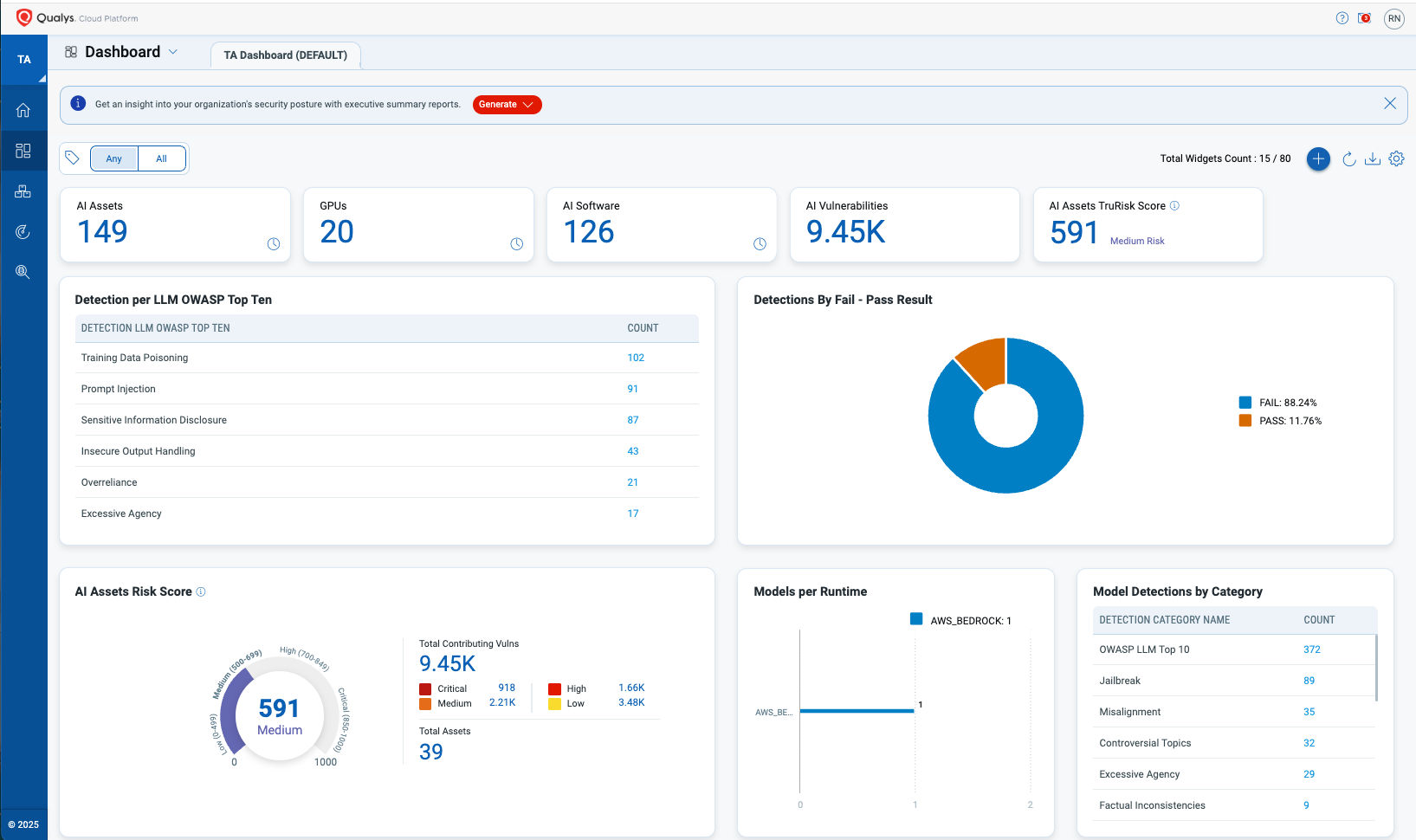

The adoption of Large Language Models (LLMs) and Generative AI is revolutionizing enterprise operations, delivering unmatched innovation, efficiency, and competitive advantage. However, this rapid integration brings significant AI security challenges that organizations must address. Insights from Qualys show that over 1,255 organizations have deployed AI/ML software across 2.8 million assets, with 6.2%—approximately 175,000 assets—classified as critical and at high risk for cyber threats.

As businesses increasingly leverage AI/ML to optimize operations, improve decision-making, and deliver personalized experiences, protecting these critical assets has become crucial. The expanding AI attack surface demands robust security solutions to safeguard AI investments, ensure compliance, and build trust in these transformative technologies. A recent whitepaper, “Securing the Future: TotalAI for LLM and Generative AI Workloads,” explores these challenges and offers actionable strategies to secure AI systems while fostering responsible innovation.

With AI adoption projected to add $15.7 trillion to the global economy by 2030, industries like healthcare, financial services, and retail are leading the charge. However, this rapid growth exposes organizations to emerging vulnerabilities that require advanced security measures and proactive risk management.

Top Critical Security Challenges for LLMs and Generative AI

As generative AI and LLMs take center stage in the digital transformation journey, their capabilities are matched by the complexity of the security challenges they introduce. From safeguarding sensitive information to meeting stringent compliance requirements, organizations are struggling to adopt a holistic approach to protecting their AI investments. However, with a focus on innovation and trust, you can address these concerns head-on.

Here, we outline the critical security risks organizations must navigate to responsibly harness the power of generative AI.

1. Data Privacy Risks

One of the most pressing concerns is the inadvertent exposure of sensitive data. According to research, 55% of data leaders rank sensitive information exposure as a top concern. LLMs can unintentionally memorize sensitive data, which, coupled with risks like inference attacks, put confidential information at significant risk.

2. Adversarial Attacks

Adversarial attacks, such as evasion, poisoning, and model inversion, threaten the integrity of AI models. These attacks can lead to incorrect or malicious outputs, undermining the trustworthiness of AI systems.

3. Intellectual Property Risks

Model theft and reverse-engineering are growing concerns, especially for organizations that rely on proprietary algorithms and datasets. Safeguarding these assets is critical to maintaining competitive advantage.

4. Compliance Complexities

Regulatory requirements, such as those outlined in the EU AI Act demand transparency, fairness, and ethical governance, adding another layer of complexity to managing AI systems.

Qualys TotalAI: A Comprehensive Security Solution

To address these challenges, Qualys TotalAI offers a unified approach to securing AI systems, combining advanced features with seamless integration into existing infrastructure.

Advanced Protection Features

Automated AI asset discovery provides comprehensive visibility into AI models, datasets, and infrastructure, ensuring organizations have a clear understanding of their AI ecosystem. Specialized risk assessments address critical vulnerabilities, including OWASP Top 10 LLM risks like prompt injection and data leakage. Additionally, real-time monitoring enables continuous detection and rapid response to emerging threats, strengthening overall security.

Infrastructure Security

Qualys TotalAI delivers comprehensive security for the infrastructure supporting AI deployments. It safeguards containerized AI workloads by addressing misconfigurations and vulnerabilities, ensuring reliable performance. Cloud configuration assessments identify and remediate risks in cloud-hosted AI environments, minimizing exposure. Additionally, automated patching streamlines patch management, enabling efficient and scalable protection for critical AI infrastructure.

Sensitive Data Detection

TotalAI leverages advanced scanning and contextual analysis to identify and mitigate the exposure of Personally Identifiable Information (PII) and other sensitive data within AI systems. By detecting patterns and analyzing data in context, it ensures that sensitive information is safeguarded against leakage or unauthorized access. This robust approach reduces security risks and helps organizations maintain compliance with regulations such as GDPR and CCPA. With TotalAI, enterprises can confidently manage sensitive data while fostering trust and ensuring responsible AI usage.

Future-Ready Security

The dynamic nature of AI-driven threats calls for innovative and adaptive security solutions. Discover how continuous monitoring, predictive analytics, and seamless infrastructure integration can help you confidently secure AI assets, mitigate risks, and ensure compliance.

Get the full whitepaper for actionable strategies.