Guard Against GenAI and LLM Risks from Development to Deployment with Qualys TotalAI

Artificial intelligence is fundamentally reshaping the enterprise. From automating customer service to accelerating code generation, large language models (LLMs) are rapidly becoming embedded in how businesses operate and compete. But as organizations embrace this innovation, they are also opening the door to new, hard-to-detect risks.

According to a recent study, 72% of CISOs are concerned that generative AI could introduce security breaches into their environment. And with nearly 70% of enterprises planning to deploy LLMs in production within the next year, the gap between AI innovation and AI security is growing fast.

At the heart of this challenge is the evolving AI attack surface. Today’s threats range from prompt injection attacks and sensitive data leaks to model theft and multimodal exploits hidden within images, audio, or video files. And because many security teams still lack visibility into where AI models are deployed — or whether they even exist in the environment — risks are often discovered only after damage is done.

Qualys TotalAI is designed to take on the challenge of securing all types of LLMs in your organization.

Solving the Visibility and Control Crisis in AI Security

The first challenge most organizations face isn’t just managing AI risks — it’s finding them. Even before worrying about shadow or forgotten LLMs, many security teams lack a basic inventory of the AI models already in use. Today, the problem spans both known and unknown models, with foundational visibility missing from the start. Security leaders tell us they’re often discovering new or unapproved AI assets only after audits, incidents, or breaches — while struggling to navigate fragmented tools that provide scattered data but little actionable insight.

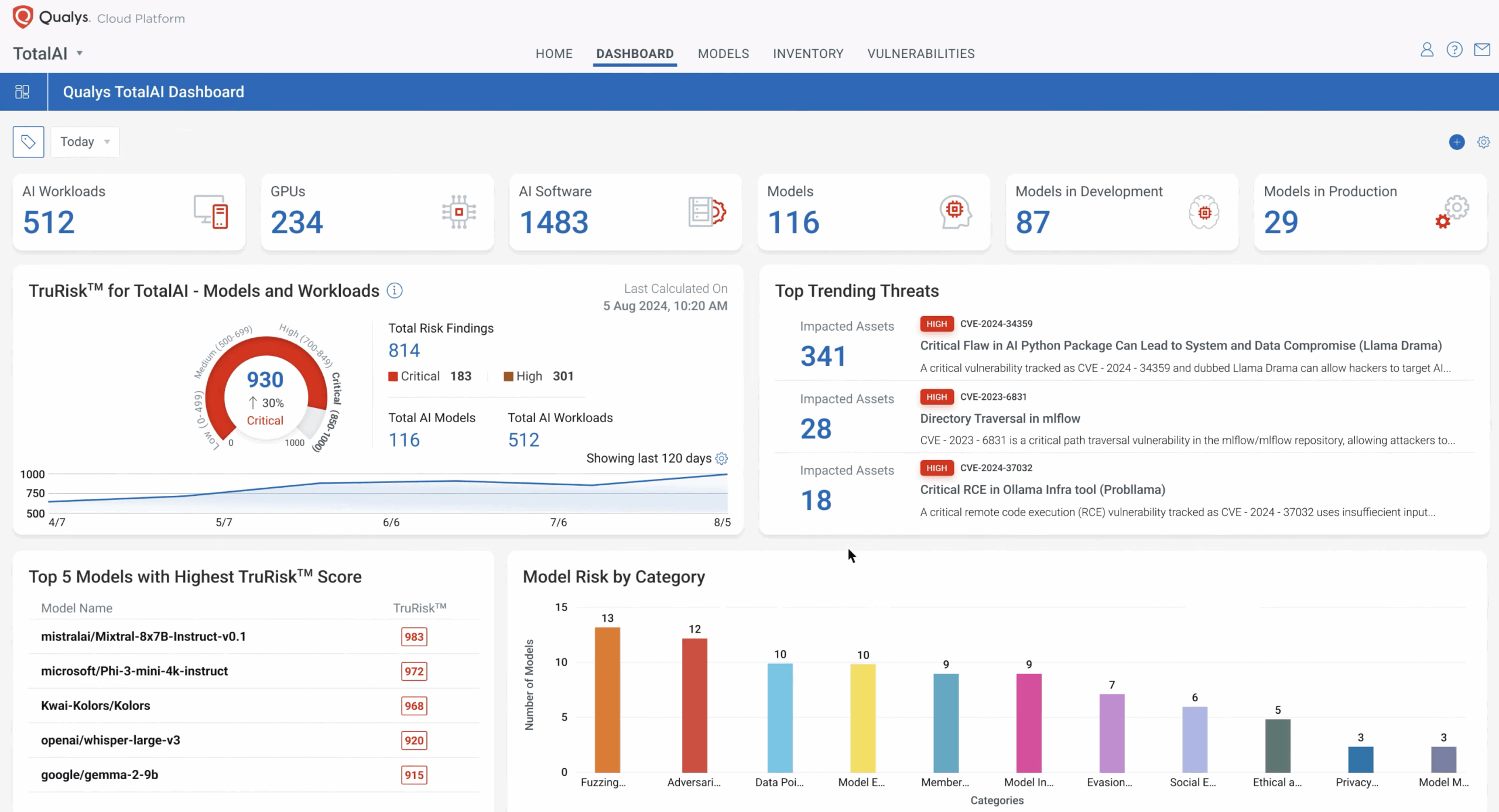

Qualys TotalAI addresses this head-on by delivering unified visibility across the AI stack — identifying where models run, which packages and hardware support them, and what vulnerabilities or exposures exist. Fingerprinting capabilities span software, GPUs, and LLM endpoints across on-premises and multi-cloud environments.

But visibility is just the start. In today’s AI landscape, proactive protection is critical.

Built for the Realities of AI Risk

The risks tied to AI and LLMs aren’t theoretical anymore — they’re happening right now. In one striking example, hackers used an AI chatbot to manipulate a dealership’s pricing engine and walk away with a brand-new SUV for just $1. While this targeted a specific business, the broader tactic — using malicious prompts to exploit model behavior — is becoming a serious threat across industries.

Other real-world attacks are escalating quickly. In a recent campaign dubbed “LLMjacking,” threat actors stole cloud authentication credentials to hijack enterprise AI resources and monetize stolen compute power. Similarly, hackers have been caught selling illicit access to Azure-hosted LLM services on dark web marketplaces — exposing critical cloud environments to unauthorized users, intellectual property theft, and compliance violations.

Without the right testing and risk management in place, enterprises face real exposure — from compromised pricing models to hijacked cloud-based AI infrastructure and manipulated customer platforms.

That’s why Qualys TotalAI is built differently. Unlike traditional vulnerability scanners, TotalAI is purpose-built for the unique realities of AI risk. It goes beyond basic infrastructure assessments to directly test models for jailbreak vulnerabilities, bias, sensitive information exposure, and critical risks mapped to the OWASP Top 10 for LLMs.

Findings are aligned to real-world adversarial tactics using MITRE ATLAS, then automatically prioritized through the Qualys TruRisk™ scoring engine — helping teams across the business quickly zero in on the most urgent and business-critical risks.

By taking this risk-led approach, TotalAI not only finds AI-specific exposures — it helps teams resolve them faster, protect operational resilience, and maintain brand trust as AI adoption accelerates across every industry.

Major New Updates in Qualys TotalAI

With the latest major updates, TotalAI expands its leadership in securing LLMs across the full pipeline:

- Internal On-Premises LLM Scanner:

Organizations can now perform comprehensive security testing of their LLMs that are hosted on-premises with internal access only — without exposing models externally. Using the latest functionality in the TotalAI internal LLM scanner, customers can manually incorporate model security testing into their CI/CD workflows. This shift-left approach enables engineering teams to detect jailbreak vulnerabilities, data leaks, and model misalignments early in the development cycle, giving them more time to retrain and harden models before deployment — all while maintaining full control behind corporate firewalls. - Expanded Jailbreak Detection:

TotalAI now detects a total of 38+ jailbreak and prompt manipulation attack scenarios, with 12 additional attacks added in the latest release. These expanded techniques simulate real-world adversarial tactics — including prompt injections, content evasion, multilingual exploits, and bias amplification — helping organizations harden their LLMs and prevent attackers from manipulating outputs or bypassing model safeguards. With the upcoming release, TotalAI will expand coverage even further to a total of 40 jailbreak scenarios. - AI Supply Chain Protection:

As AI systems increasingly rely on external models, libraries, and code packages, the supply chain has become a critical attack vector. TotalAI introduces continuous monitoring to detect package hallucination attacks, where LLMs are tricked into recommending non-existent (but malicious) third-party packages. By identifying and blocking these threats early, organizations can prevent model theft, maintain software integrity, and protect sensitive data from unauthorized exfiltration or tampering. - Multimodal Threat Coverage:

In today’s AI environment, threats don’t only come through text. TotalAI’s enhanced multimodal detection identifies prompts or perturbations hidden inside images, audio, and video files that are designed to manipulate LLM outputs. This helps organizations safeguard against subtle, cross-modal exploits — ensuring that models do not leak private information, produce unsafe outputs, or behave unpredictably when encountering manipulated media inputs. - Universal Endpoint Scanning:

With the explosion of AI service providers, it’s essential to secure models no matter where they are hosted. TotalAI now offers one-click security assessments for any LLM exposing an OpenAI-compatible chat-completion API, including AWS Bedrock, Azure AI, Hugging Face, Google Vertex AI, and self-hosted deployments. This enables scalable, consistent risk monitoring across diverse AI environments — helping enterprises maintain a uniform security posture whether models are hosted in the public cloud, private cloud, or on-premises.

With these enhancements, TotalAI becomes the industry’s most comprehensive solution for securing the AI lifecycle — from early development through real-world deployment.

Benefits to Customers

These major new enhancements bring tangible benefits to security, development, and compliance teams:

- Faster, Safer AI Application Development: The internal on-premises scanner allows teams to shift security left, embedding model risk assessments earlier into the CI/CD pipeline — improving both agility and security posture.

- Seamless Integration for Existing Customers: Organizations that have already deployed Qualys scanners or agents can easily incorporate TotalAI’s LLM and AI security capabilities — without rearchitecting or redeploying new infrastructure. This accelerates time to value while maintaining data residency and control.

- Comprehensive MLOps Protection: By securing AI infrastructure and LLMs across the full pipeline — from dev to deployment — TotalAI reduces the chance of blind spots and ensures regulatory readiness.

- Enhanced Defense Against Emerging AI Threats: With expanded jailbreak and multimodal detection, businesses stay protected against evolving adversarial tactics designed to manipulate, exfiltrate, or weaponize AI models.

The result: a secure foundation for AI innovation, backed by continuous risk visibility, proactive defense, and seamless operational integration.

Why It Matters Now

As every business becomes an AI business, the stakes have never been higher. Enterprises need to move quickly to secure not just their models, but the infrastructure, code, and processes that support them. Delaying AI risk management invites reputational damage, regulatory penalties, and business disruption.

TotalAI provides the visibility, intelligence, and automation needed to stay ahead. It eliminates blind spots, empowers faster remediation, and integrates natively with the broader Qualys Cloud Platform — unifying AI security with your broader cybersecurity strategy.

AI is increasing the efficiency of businesses in enterprises at various levels. Don’t let security be the reason it fails. Explore Qualys TotalAI and learn how to safeguard your models, infrastructure, and innovation pipeline — from dev to deployment.